Law enforcement increasingly enlists artificial intelligence to fight crime

It’s not to the point of Hollywood’s “Minority Report,” in which “Precrime” police use foreknowledge to arrest people before they commit crimes, but police across the country are turning to artificial intelligence to carry out their duties.

More than half of police agencies use AI or machine learning tools for data analysis, and it’s proving to be a natural fit, said Scott White, director of George Washington University’s Cybersecurity Program and Cyber Academy.

“Police respond to crime through crime forecasting or intelligence-led policing,” Mr. White said. “We can actually move to the area where we may be able to actually prevent criminal conduct from occurring with certain types of crime.”

As it cruises the streets, a police car equipped with an AI-powered camera can read license plates and check the data against the department’s files, maybe spotting a stolen vehicle or one registered to someone with outstanding warrants.

AI can sift through hundreds of hours of body camera footage to detect key moments or spot trends otherwise missed in police encounters.

Facial recognition software may be coming soon, giving officers another tool to spot suspects or other potential community dangers.

“You could have the acknowledgment that someone is carrying a firearm, depending on what pieces of kit are running along with that camera,” Mr. White said.

The events at the U.S. Capitol on Jan. 6, 2021, give some sense of available technology.

The FBI collected more than 100,000 images and tens of thousands of video files from the Capitol’s security systems, social media posts and phone records. Agents constructed a history of people whose phones were in the vicinity of the Capitol while the mob rampaged through the building.

Experts said AI helped agents sort through the data, make connections and track movements before, during and after the riot.

As that sort of capability bleeds into more mundane crimes, civil liberties advocates say combining AI technology with the power of policing is a recipe for invasive law enforcement.

“Just as analog surveillance historically has been used as a tool for oppression, policymakers and the public must understand the threat posed by emerging technologies to successfully defend civil liberties and civil rights in the digital age,” the Electronic Frontier Foundation says in its evaluation of “street-level surveillance.”

Rounding up suspects after a crime is one thing.

AI scientists are working on tools to predict and hopefully head off Jan. 6-style events or lower-level chaos.

The Michigan State Capitol will soon deploy ZeroEyes, an AI technology designed to detect someone carrying a gun onto the campus. It links into the complex’s surveillance cameras, searches the video and alerts a human when it spots a gun.

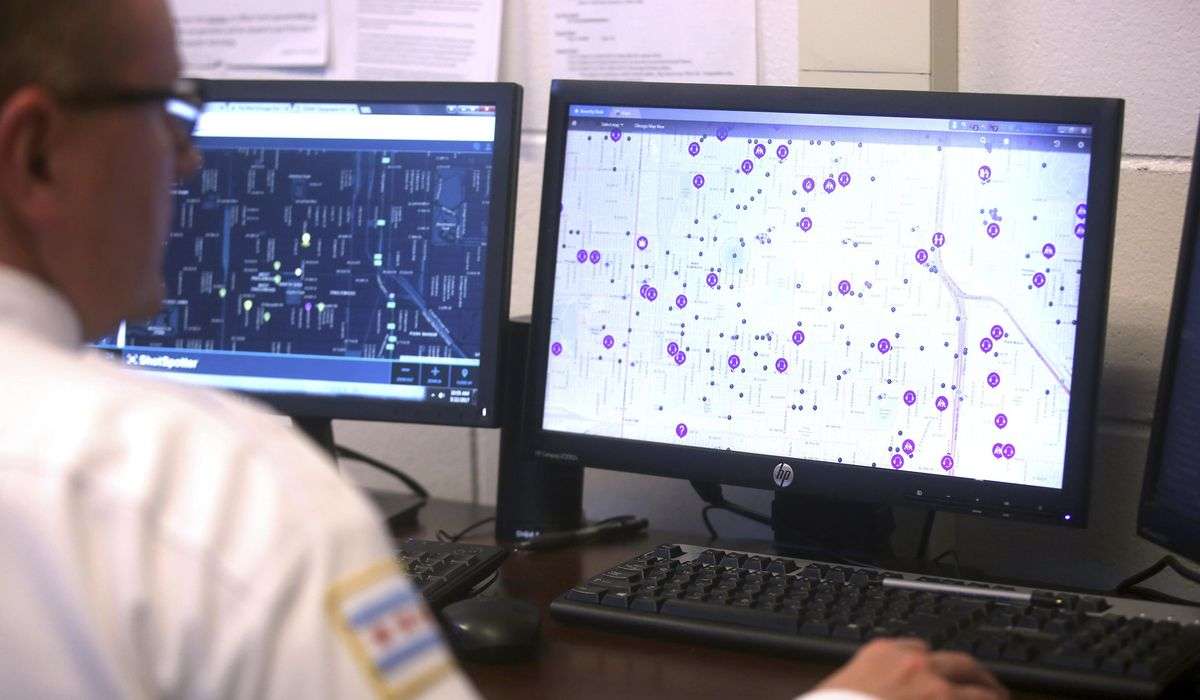

Law enforcement agencies in at least 120 communities use ShotSpotter to pinpoint scenes of gunshots.

A backlash to the ShotSpotter technology is growing.

In Durham, North Carolina, the City Council recently voted to let its contract expire after a one-year pilot program.

From Dec. 15, 2022, to Dec. 15, 2023, more than 5,000 shots were detected and analyzed in a 3-mile radius of the city. Twenty-one guns were recovered, and 22 arrests were made.

The Durham County Fraternal Order of Police objected to the council’s decision. It said ShotSpotter was 95% effective and helped police reach crime scenes faster than they could by responding to traditional 911 calls.

In Chicago, activists have demanded that Mayor Brandon Johnson make good on his campaign promise and cancel the city’s contract. Mr. Johnson has cited “human error” and the 2021 police killing of a 13-year-old boy after a pursuit initiated by a ShotSpotter report.